(This post was written back in October. I had it written, but didn’t get it edited before getting fired, so it’s just been sitting on my drive gathering digital dust. It’s still good information, but just keep in mind that the timing is off. Everything I’m talking about happening in the present actually happened almost half a year ago.)

Welcome to another week. This week was a little rough on the development front. One of the other, more experienced, developers is drowning in projects (actually, most of the more experienced developers are drowning in projects pretty much all of the time).

Being a naturally helpful sort, and possibly not nearly as smart as I like to think I am, I offered to give him a hand with what I thought was the easiest part of his task list, new rules in the rule engine that we used to provide all kinds of customization to the user experience.

As it turns out, the rules engine is pretty complicated because it’s called multiple times—from multiple different points inside of the code base—and therefore there is a lot of different context that needs to be understood in order to effectively write the rules.

I suspect that there are many things that the other developers are doing which are more complicated than the rules engine, but it’s feeling like I’m very much in over my head right now.

The week (when I wasn’t helping out with accounting tasks that haven’t fully transitioned away from me yet) was spent with me taking my best stab at writing new rules, sending them over to my manager (who is the one who wrote the rules engine) to approve and having him kick them back to me with a verbal explanation as to why they won’t work.

He’s not doing anything wrong—he’s been really patient and awesome, but it’s still a little wearing to continually come up short (inside the privacy of my own mind if not necessarily with regards to his expectations yet).

Added to the less awesomeness of the week is that I haven’t made very much progress on my side projects. So far, I’ve created a simple express app from scratch, got it working on my local box, and successfully loaded it up to Heroku (to serve as the cloud-based compute infrastructure).

I’ve also created a MySQL database using Google Cloud as my infrastructure provider, downloaded SSL certs, used those certs to connect from my local box to the database (using Navicat since that’s what I was using previously at work while doing the accounting).

That all feels like pretty good progress, but it all happened last week. Partially that’s because I’ve been putting in extra hours at work trying to get my arms around the rules engine, and partially that’s because I’ve been stuck on how to use Sequelize to connect to the database while using an SSL cert.

I think I’ve finally found a guide that is pointing me at the right direction as far as that’s going, so I’m hoping to make more progress later today on connecting my app to the database and making it stateful, but right now I need to be working on a blog post, and the logical thing to me would be to share the steps that I used to create my express app and push it up to Heroku.

There are tons of videos out there talking about how to do something like this, but my preferred way of consuming that kind of stuff is in written form. It saves me having to pause and repeat stuff all of the time as I’m trying to follow along with what the presenter is doing.

Putting my steps up on the blog has the benefit of checking the box on the blog post that I’m supposed to be writing today. Even better, it means that anyone who prefers written guides to video guides will have the ability to search down my guide. And, for a third (admittedly minor in this day and age of cloud-based backups) benefit, it means that I’ll have me steps recorded for future use in case I need them.

On to the guide:

Preliminaries:

1. I’m working on a Mac (more regarding that in a future post)

2. I’ve installed the Heroku command line interface using Brew

3. I’ve created a Heroku account and a GitHub account

4. I’m using Intellij. You can use Sublime or another editor of your choice.

The actual process:

1. Create a new repository on GitHub.com (or with Git desktop)

a. Say to initialize it with a readme

b. Fetch your new repository from Git. (I use Git Desktop to pull it down to my local machine)

c. I have a services directory, and then put each Git repository as a subfolder inside of services.

2. Create a .gitignore file at the root directory of your project.

a. For me this is in Services\Storage-App

b. At this point, I got a message from Intellij asking if I wanted to add .gitignore (if I wanted it to be part of the git repository). I said yes, but said no on all of the stuff that it asked about in the .idea/ folder.

c. I populated .gitignore as per this example:

d. https://github.com/expressjs/express/blob/master/.gitignore

# OS X

.DS_Store*

Icon?

._*

# npm

node_modules

package-lock.json

*.log

*.gz

.idea

.idea/

# environment variables

env*.yml

e. .idea and .idea/ are there because I’m using Intellij. The link above has options for windows or linux.

3. Run npm install express -g from the command line inside of the project folder.

a. This downloads and installs express (I’m pretty sure that you only have to run this the first time that you want to add express to an app).

4. Run npm init from the command line inside of the project folder.

a. This creates the package.json file that lists your dependacies and tells the computer what to use as your starting file.

b. I used all just the default options other than changing index.js to app.js. You can use either option.

5. Run npm install express –save from the command line inside of the project folder.

a. This brings a whole bunch of express dependencies into the project. (in the node_modules folder)

b. You should now have “express”: “^4.16.3” (or a later or earlier version depending on what version of express you have installed) in your list of dependencies in package.json.

6. Create app.js inside of the root directory (same level as package.json and package-lock.json)

a. I did this via Intellij. You should in theory be able to just do it from inside the command line via touch app.js if you wanted to.

7. Inside of app.js add the following lines:

const express = require(‘express’);

const app = express();

const normalizePort = port => parseInt(port, 10);

const PORT = normalizePort(process.env.PORT || 5000);

app.get(‘/’, function(req, res) {

res.send(‘Hello World’);

}).listen(PORT);

console.log(“Waiting for requests. Go to LocalHost:5000”);

8. Inside of package.json at the end of the “test” line, put a comma and then add a new line:

a. “start”: “node app.js”

9. Inside the app directory, type npm start

a. (You should see “Waiting for requests. Go to LocalHost:5000” in the terminal)

10. Open a browser window and got to http://localhost:5000/

a. (you should see “Hello World” in the browser)

b. This means that you’ve successfully run the app on your local machine

11. Create a Procfile at the root level.

a. Input (into the proc file) web: node app.js

12. Push the app up to heroku

a. Change to the directory containing the app.

b. Type git init

c. heroku create $APP_NAME –buildpack heroku/nodejs

i. I left the app name blank and just let Heroku create a random name.

ii. That means my command was heroku create –buildpack Heroku/nodejs

d. git add .

e. git commit -m “Ready to push to Heroku”

i. You should also be able to do the commit via github desktop.

f. git push heroku master

g. heroku open

i. This should open your browser and show you “Hello World”.

h. You’ve successfully pushed the app up to Heroku. Congratulations!

That’s it for this week. I’ll come back and add some additional detail as I get a better understanding of what some of these commands do.

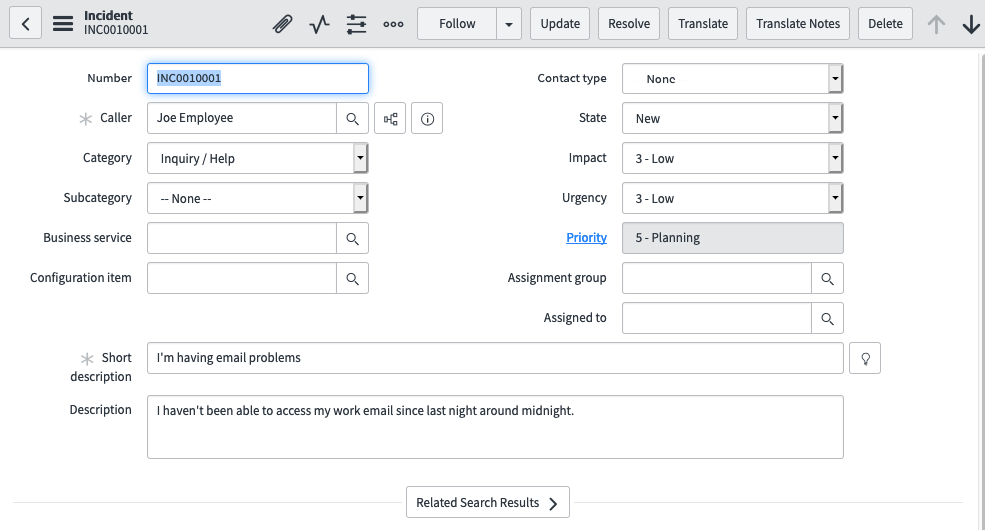

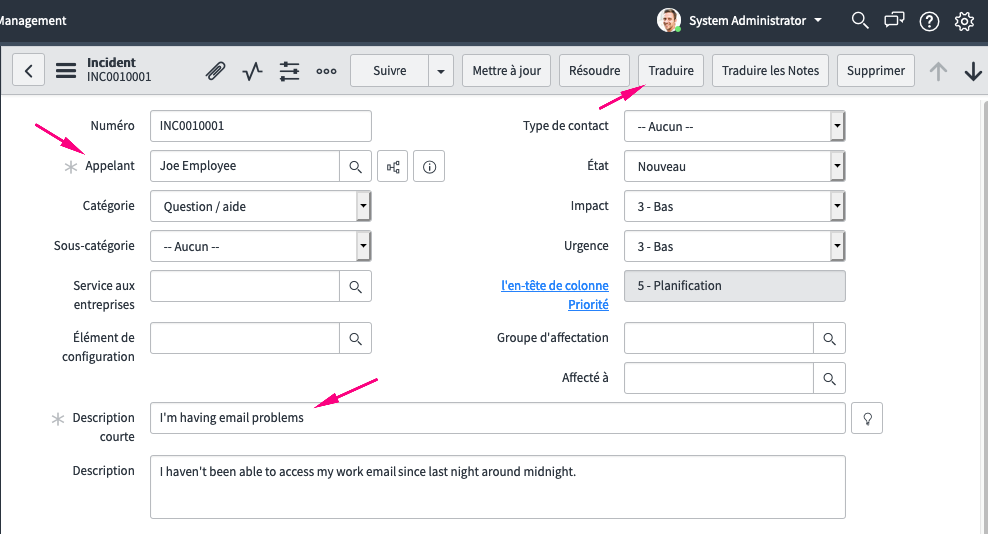

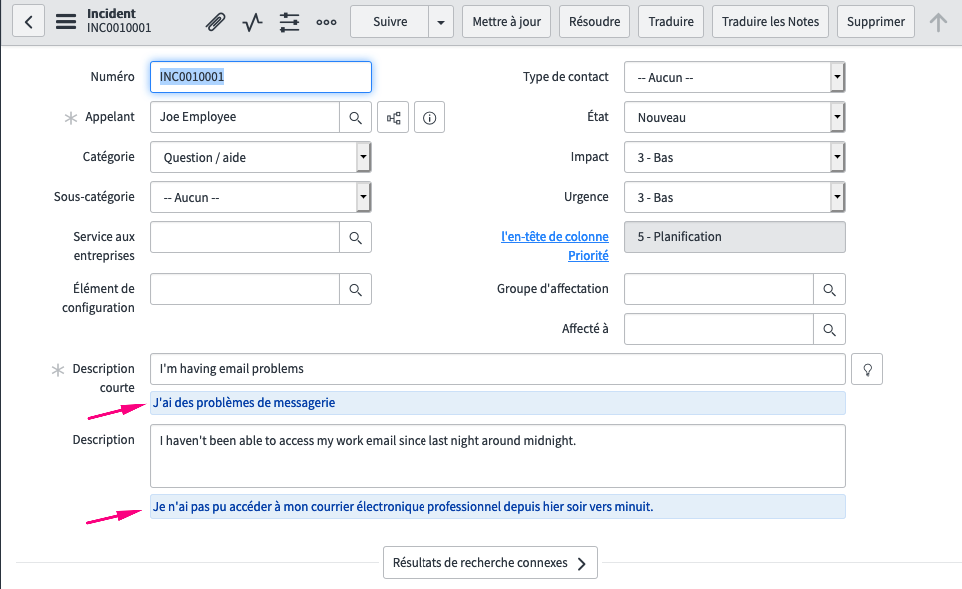

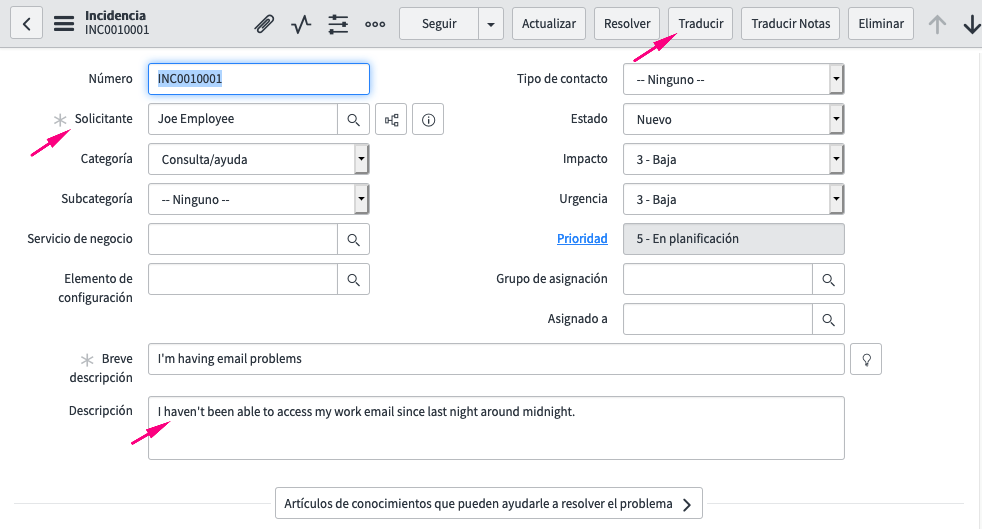

Next, you can see a screenshot showing the same incident, but with the user’s language switched to French. You’ll notice that the labels are translated (‘Caller’ is changed to ‘Appelant’), which is standard with the French Translation plugin.

Next, you can see a screenshot showing the same incident, but with the user’s language switched to French. You’ll notice that the labels are translated (‘Caller’ is changed to ‘Appelant’), which is standard with the French Translation plugin.

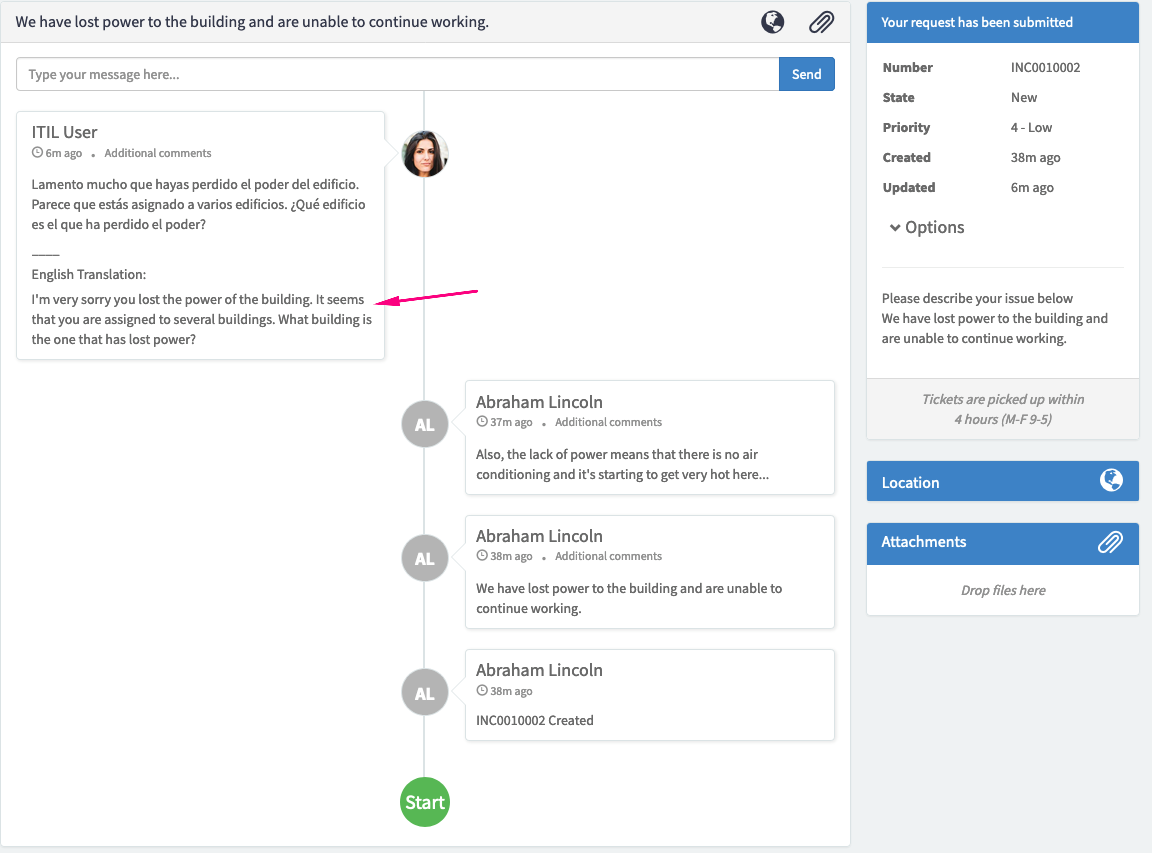

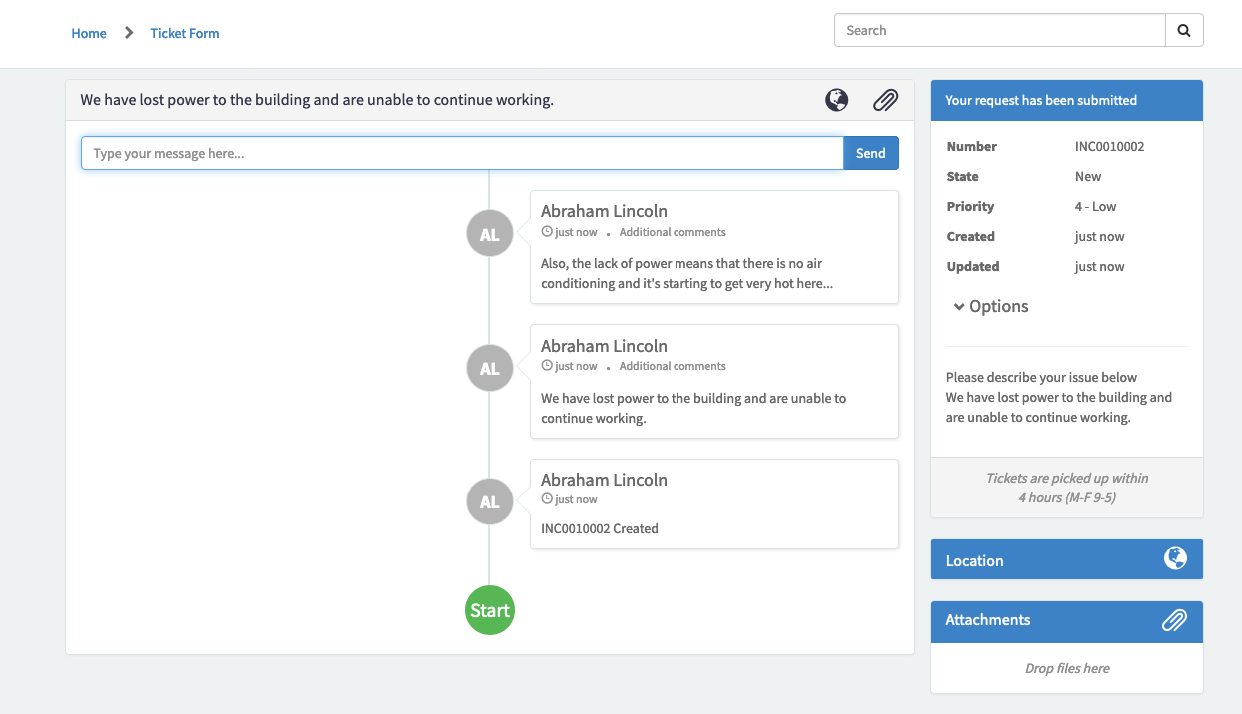

Below is a screen shot of a new incident, once again in English. This time from the service portal.

Below is a screen shot of a new incident, once again in English. This time from the service portal.

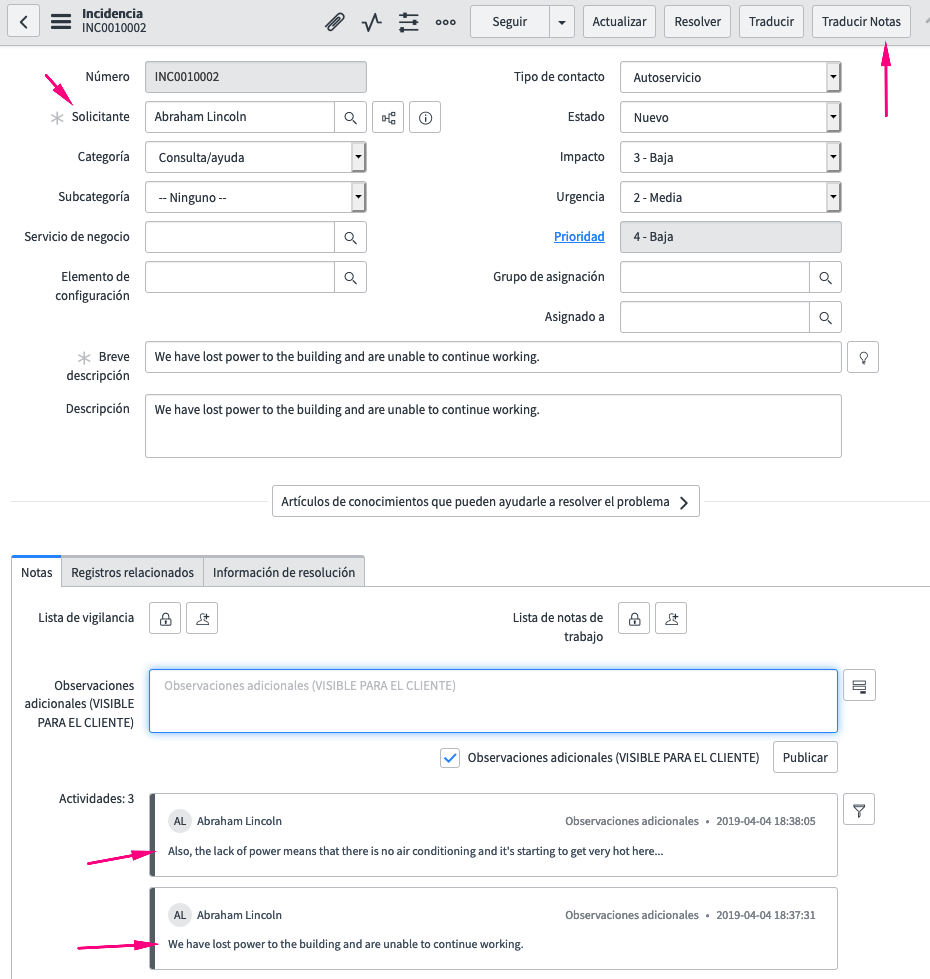

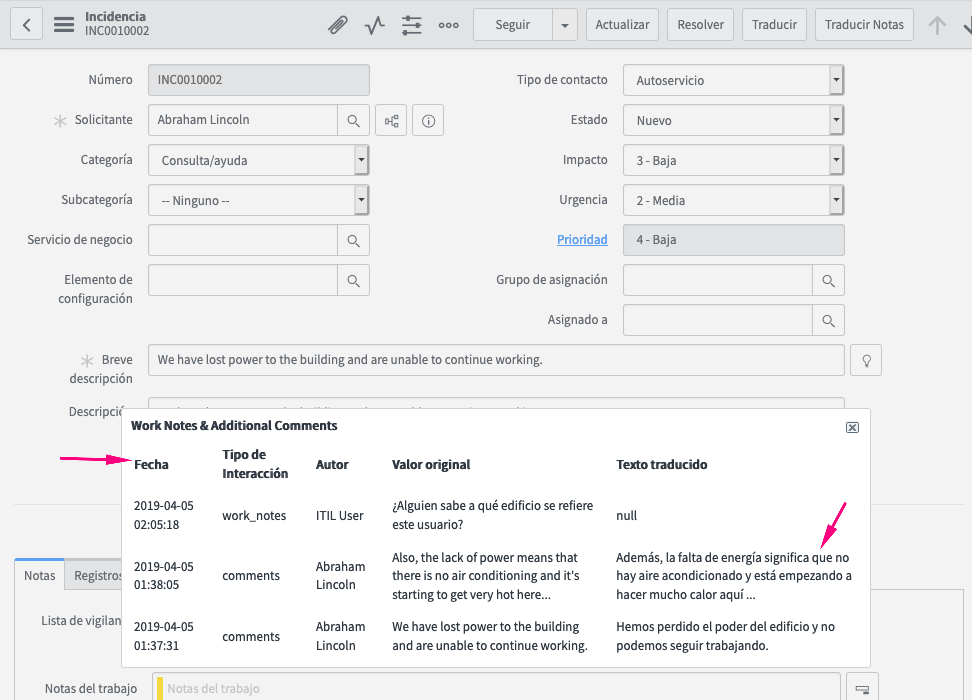

Clicking the ‘Translate Notes’/’Traducir Notas’ button brings up a UI Page with the original value in English in the 4th column, and the Spanish translation in the 5th column.

Clicking the ‘Translate Notes’/’Traducir Notas’ button brings up a UI Page with the original value in English in the 4th column, and the Spanish translation in the 5th column. Finally, here is the ESS view with an English translation for the Spanish additional comments response which was input by the ITIL User.

Finally, here is the ESS view with an English translation for the Spanish additional comments response which was input by the ITIL User.